I am an Assistant Professor in the Department of Computer Science and Engineering at the University of Seoul, where I lead the Visual and General Intelligence Lab.

Previously, I was a Researcher at the Electronics and Telecommunications Research Institute (ETRI). I received my Ph.D. and M.S. degrees in Electrical Engineering from the Korea Advanced Institute of Science and Technology (KAIST), where I was advised by In So Kweon. During my Ph.D. studies, I interned at Adobe Research and was honored with the Qualcomm Innovation Fellowship.

My research aims to build robust multi-modal AI models capable of understanding and generating complex real-world scenarios, with a specific focus on co-designing effective data and learning frameworks. My primary research interests include the following areas, but I am also open to exploring other challenging and impactful problems.

- Scalable and Efficient Learning
  - Multimodal Learning & Data-efficient Learning
- Data-centric AI
  - Effective Large-scale Dataset Collection, Generation, and Curation
- Generative AI
  - Image/Video Generation & Multi-modal Large Language Models

Contact

- kwanyong.park [at] uos.ac.kr, pkyong7 [at] kaist.ac.kr
- 163 Seoulsiripdaero, Dongdaemun-gu, Seoul 02504, Republic of Korea

Education

-

PhD, Major in EE, KAIST, 2023

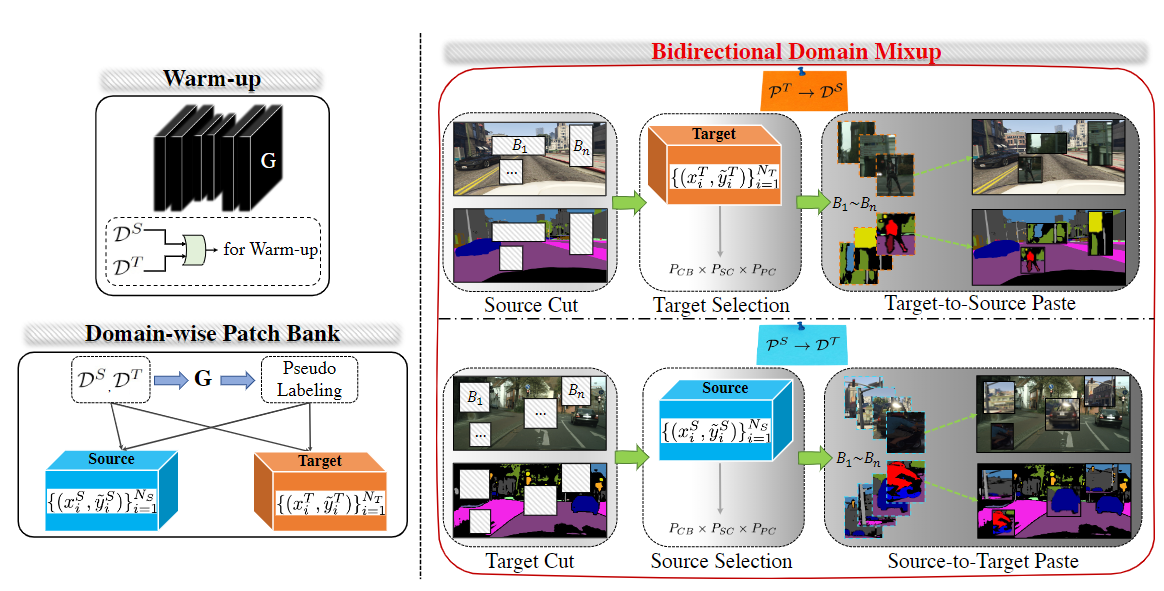

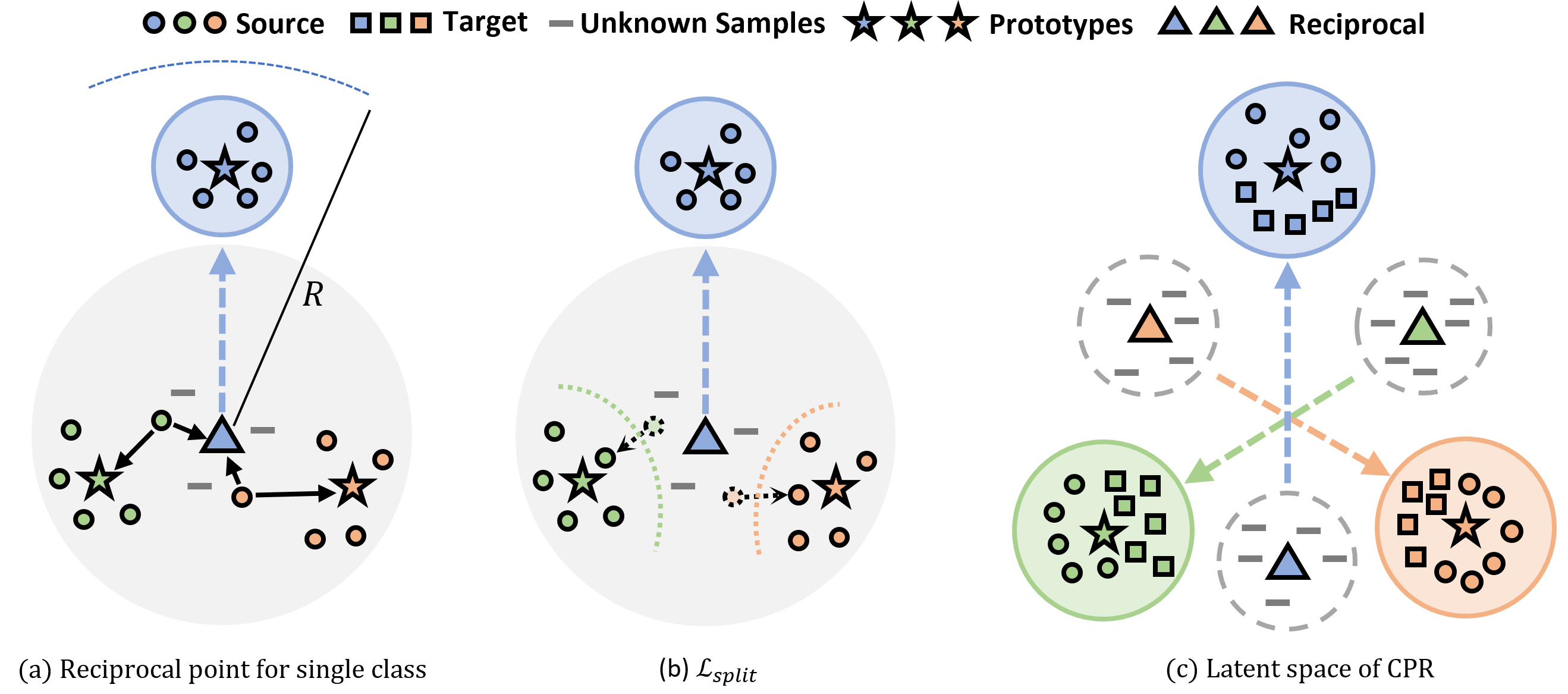

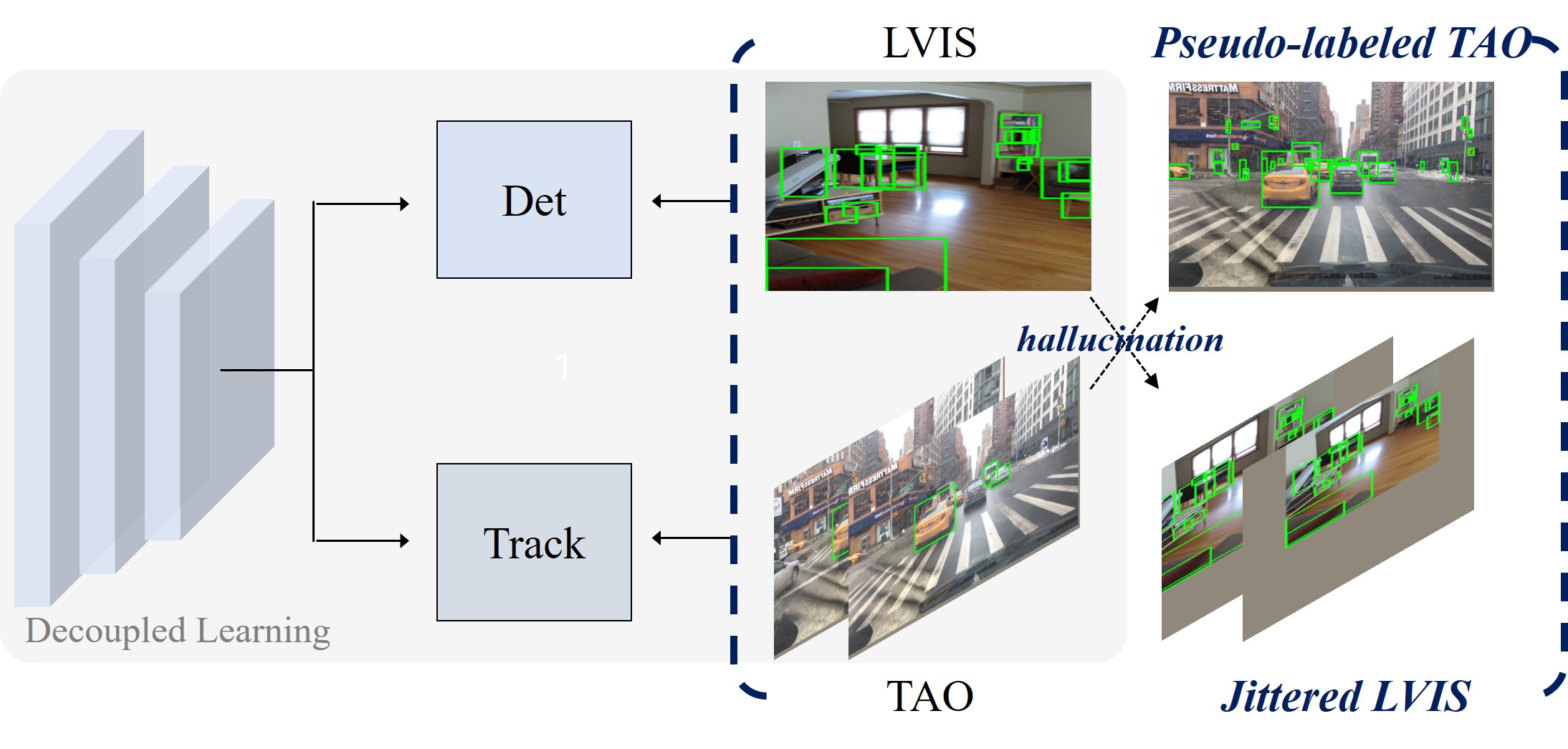

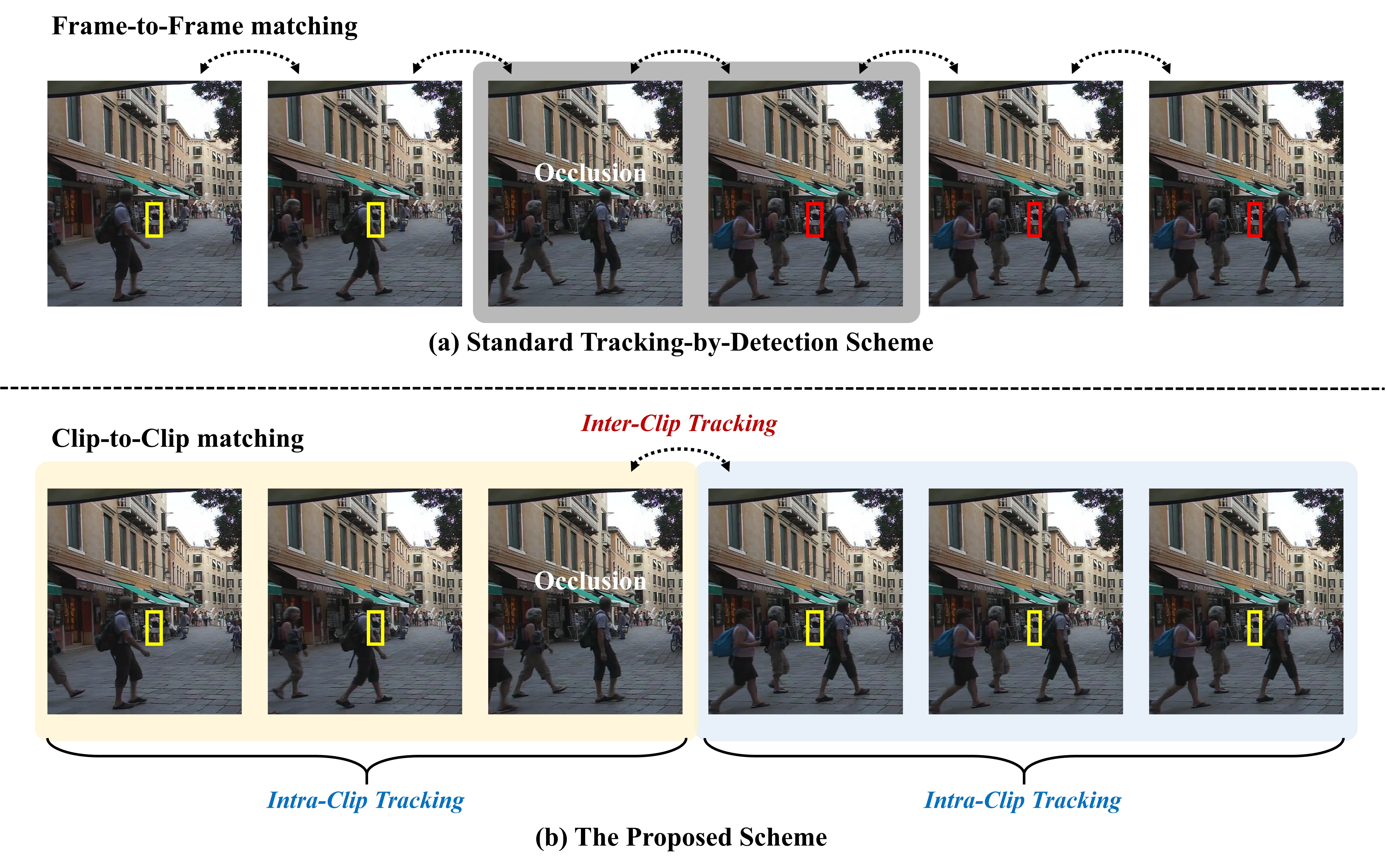

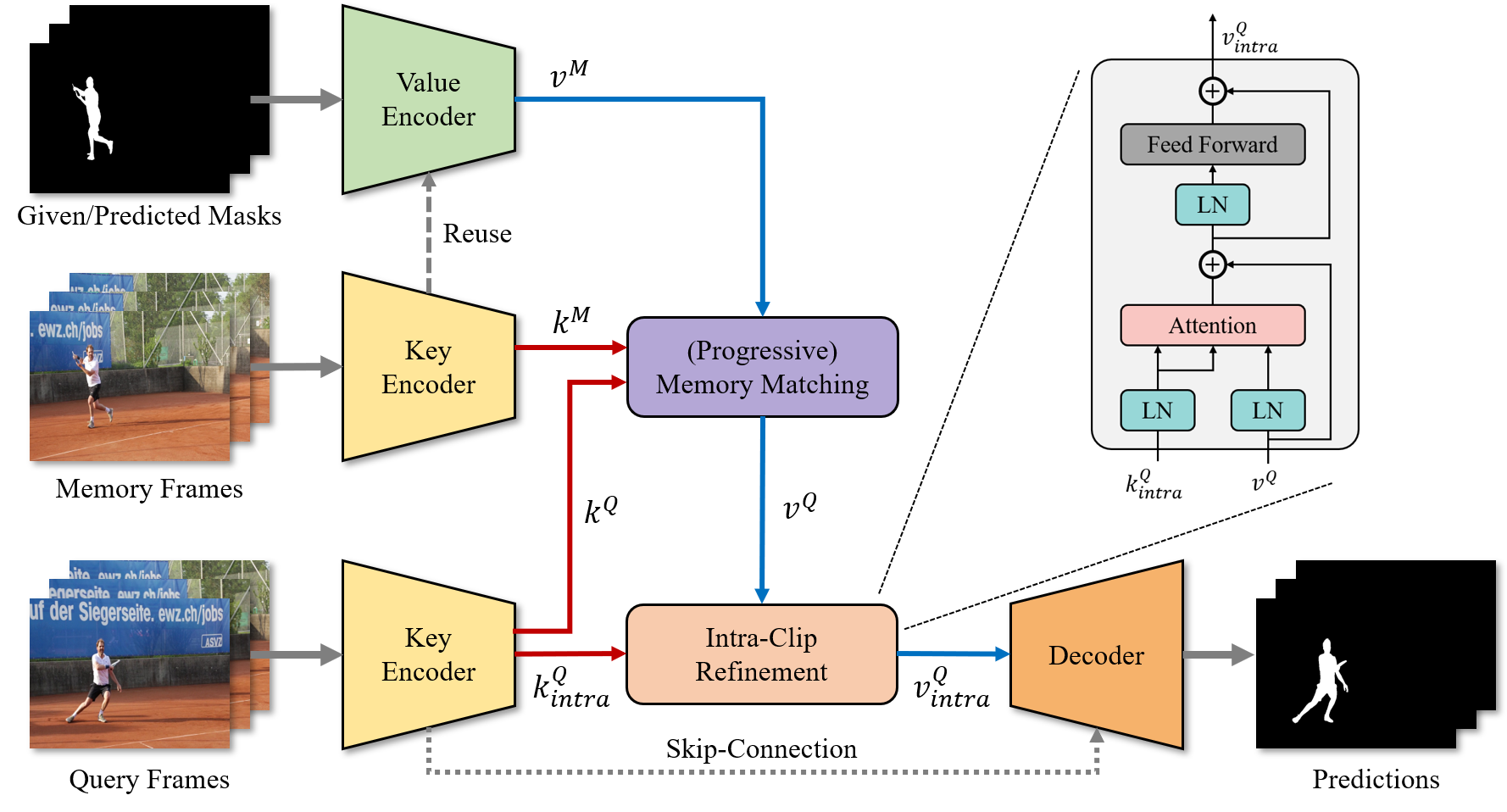

on "Towards Universal Visual Scene Understanding in the Wild"

Advisor: Prof. In So Kweon

-

MS, Major in EE, KAIST, 2019

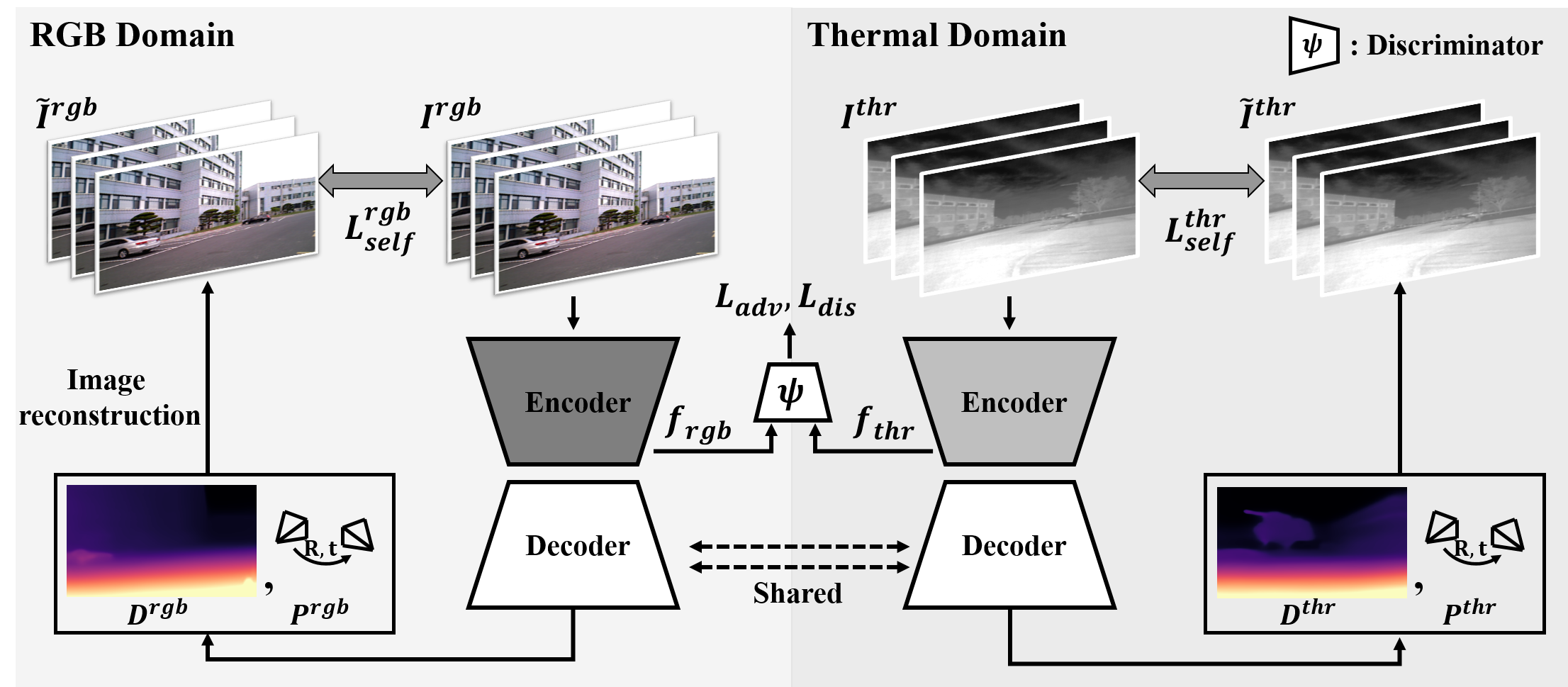

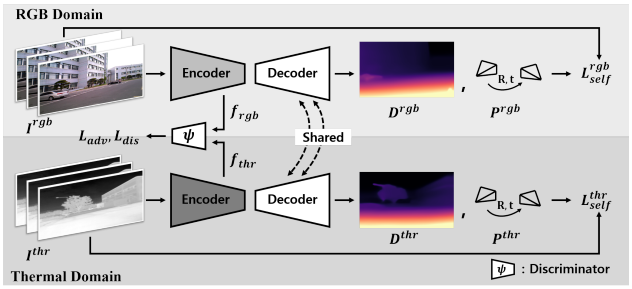

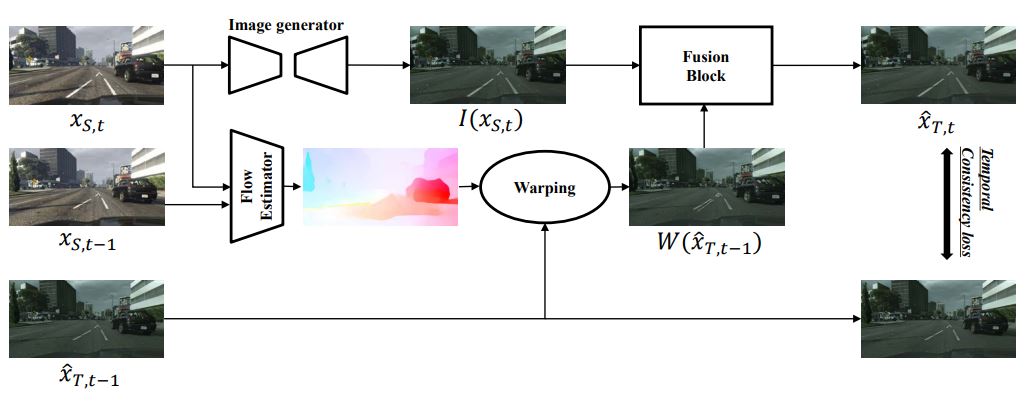

on "Learning unpaired video-to-video translation for domain adaptation"

Advisor: Prof. In So Kweon

- BS, Double Major in ME and EE, KAIST, 2018